Linux vmalloc: Principles and Implementation

Table of Contents

- Preface
- 1. How vmalloc works
  - 1.1 vmalloc space
  - 1.2 vmalloc implementation
  - 1.3 __vmalloc_node_range
    - 1.3.1 __get_vm_area_node
    - 1.3.2 __vmalloc_area_node
    - 1.3.3 map_vm_area
- 2. Data structures
  - 2.1 struct vm_struct
  - 2.2 struct vmap_area
- 3. vmalloc initialization
- Summary
- References

前言

物理上连续的内存映射对内核是最高效的,这样会充分利用高速缓存获得较低的平均访问时间,但是伴随着系统的运行,会出现大量物理内存碎片,系统可能在分配大块内存时,无法找到连续的物理内存页。

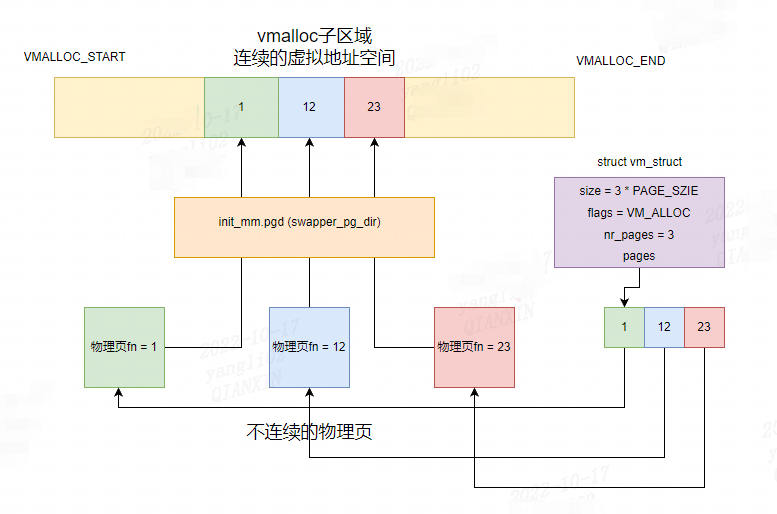

vmalloc用于在内核中分配物理上不连续但虚拟地址连续的内存空间。

主要用于内核模块的分配和I/O驱动程序分配缓冲区。

1. How vmalloc works

1.1 vmalloc space

```
// linux-3.10/Documentation/x86/x86_64/mm.txt
Virtual memory map with 4 level page tables:
ffffc90000000000 - ffffe8ffffffffff (=45 bits) vmalloc/ioremap space
```

```c
// linux-3.10/arch/x86/include/asm/pgtable_64_types.h
#define VMALLOC_START    _AC(0xffffc90000000000, UL)
#define VMALLOC_END      _AC(0xffffe8ffffffffff, UL)
```
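A few lines of arithmetic are enough to check the "(=45 bits)" annotation. The C sketch below is only an illustration. The macro names VMALLOC_START_X86_64 and VMALLOC_END_X86_64 and the helpers range_bytes and log2_exact are made up for this example. The constants are copied from the definitions above, and VMALLOC_END is treated as an inclusive bound.

```c
#include <assert.h>
#include <stdint.h>

/* Bounds copied from VMALLOC_START / VMALLOC_END above
 * (x86_64, 4-level paging, linux-3.10). VMALLOC_END is inclusive. */
#define VMALLOC_START_X86_64 0xffffc90000000000ULL
#define VMALLOC_END_X86_64   0xffffe8ffffffffffULL

/* Size in bytes of the inclusive address range [start, end]. */
static uint64_t range_bytes(uint64_t start, uint64_t end)
{
    assert(end >= start);
    return end - start + 1;
}

/* log2 of a power of two; returns -1 if x is not a power of two. */
static int log2_exact(uint64_t x)
{
    int n = 0;

    if (x == 0 || (x & (x - 1)) != 0)
        return -1;
    while (x > 1) {
        x >>= 1;
        n++;
    }
    return n;
}
```

The range is therefore exactly 2^45 bytes. That is 32 TiB of kernel virtual address space, shared by vmalloc and ioremap.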

1.2 vmalloc implementation

```c
// linux-3.10/mm/vmalloc.c
/**
 * vmalloc - allocate virtually contiguous memory
 * @size: allocation size
 * Allocate enough pages to cover @size from the page level
 * allocator and map them into contiguous kernel virtual space.
 *
 * For tight control over page level allocator and protection flags
 * use __vmalloc() instead.
 */
void *vmalloc(unsigned long size)
{
    return __vmalloc_node_flags(size, NUMA_NO_NODE,
                                GFP_KERNEL | __GFP_HIGHMEM);
}
EXPORT_SYMBOL(vmalloc);
```

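vmalloc takes a single parameter, size: the amount of kernel virtual address space requested. size is given in bytes, but memory is handed out in whole pages, because __vmalloc_node_range rounds size up with PAGE_ALIGN. With 4 KiB pages, as assumed here, the amount actually allocated is always a multiple of 4 KiB.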
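Below is a minimal user-space sketch of this rounding, assuming 4 KiB pages (PAGE_SHIFT = 12). PAGE_ALIGN is re-implemented with the usual round-up-to-boundary formula. vmalloc_nr_pages is a hypothetical helper, not a kernel function.

```c
#include <assert.h>

/* Assumption: 4 KiB pages, as on x86_64. */
#define PAGE_SHIFT 12
#define PAGE_SIZE  (1UL << PAGE_SHIFT)
/* Round x up to the next page boundary (same formula as the kernel's PAGE_ALIGN). */
#define PAGE_ALIGN(x) (((x) + PAGE_SIZE - 1) & ~(PAGE_SIZE - 1))

/* Hypothetical helper: how many physical pages back vmalloc(size).
 * The extra guard page reserved in the virtual range is not counted. */
static unsigned long vmalloc_nr_pages(unsigned long size)
{
    return PAGE_ALIGN(size) >> PAGE_SHIFT;
}
```

For example, vmalloc(10000) is backed by 3 pages (12288 bytes). A size of 0 is rejected outright by __vmalloc_node_range (goto fail).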

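GFP_KERNEL is the most common flag for kernel memory allocation. An allocation with this flag may sleep (block) and runs at normal priority. It is meant for process context, where it is safe to reschedule. For this reason, vmalloc must not be called from interrupt context or from any other place where blocking is not allowed.

__GFP_HIGHMEM asks the allocator to prefer the high memory zone for the physical pages. x86_64 has no high memory zone, so on x86_64 this flag effectively falls back to the normal zones.

The most common use of vmalloc in the kernel is loading modules. A module can be loaded at any time, and if it carries a lot of data there is no guarantee that enough physically contiguous memory is available. vmalloc solves this by stitching many small physical pages (usually individual single-page frames) into one contiguous range of kernel virtual addresses.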

```c
// linux-3.10/mm/vmalloc.c
static inline void *__vmalloc_node_flags(unsigned long size,
                                         int node, gfp_t flags)
{
    return __vmalloc_node(size, 1, flags, PAGE_KERNEL,
                          node, __builtin_return_address(0));
}
```

```c
// linux-3.10/mm/vmalloc.c
/**
 * __vmalloc_node - allocate virtually contiguous memory
 * @size: allocation size
 * @align: desired alignment
 * @gfp_mask: flags for the page level allocator
 * @prot: protection mask for the allocated pages
 * @node: node to use for allocation or NUMA_NO_NODE
 * @caller: caller's return address
 *
 * Allocate enough pages to cover @size from the page level
 * allocator with @gfp_mask flags. Map them into contiguous
 * kernel virtual space, using a pagetable protection of @prot.
 */
static void *__vmalloc_node(unsigned long size, unsigned long align,
                            gfp_t gfp_mask, pgprot_t prot,
                            int node, const void *caller)
{
    return __vmalloc_node_range(size, align, VMALLOC_START, VMALLOC_END,
                                gfp_mask, prot, node, caller);
}
```

The core of vmalloc is __vmalloc_node_range.

The relevant definitions, and the arguments it receives on the vmalloc() path:

```c
// linux-3.10/include/linux/numa.h
#define NUMA_NO_NODE    (-1)

// linux-3.10/arch/x86/include/asm/pgtable_types.h
#define __PAGE_KERNEL   (__PAGE_KERNEL_EXEC | _PAGE_NX)
#define PAGE_KERNEL     __pgprot(__PAGE_KERNEL)
```

```
node     = NUMA_NO_NODE
align    = 1
gfp_mask = GFP_KERNEL | __GFP_HIGHMEM
start    = VMALLOC_START
end      = VMALLOC_END
prot     = PAGE_KERNEL
caller   = __builtin_return_address(0)
```

```c
// linux-3.10/include/linux/vmalloc.h
/* bits in flags of vmalloc's vm_struct below */
#define VM_IOREMAP   0x00000001  /* ioremap() and friends */
#define VM_ALLOC     0x00000002  /* vmalloc() */
#define VM_MAP       0x00000004  /* vmap()ed pages */
#define VM_USERMAP   0x00000008  /* suitable for remap_vmalloc_range */
#define VM_VPAGES    0x00000010  /* buffer for pages was vmalloc'ed */
#define VM_UNLIST    0x00000020  /* vm_struct is not listed in vmlist */
/* bits [20..32] reserved for arch specific ioremap internals */
```

```c
// linux-3.10/mm/vmalloc.c
/**
 * __vmalloc_node_range - allocate virtually contiguous memory
 * @size: allocation size
 * @align: desired alignment
 * @start: vm area range start
 * @end: vm area range end
 * @gfp_mask: flags for the page level allocator
 * @prot: protection mask for the allocated pages
 * @node: node to use for allocation or NUMA_NO_NODE
 * @caller: caller's return address
 *
 * Allocate enough pages to cover @size from the page level
 * allocator with @gfp_mask flags. Map them into contiguous
 * kernel virtual space, using a pagetable protection of @prot.
 */
void *__vmalloc_node_range(unsigned long size, unsigned long align,
            unsigned long start, unsigned long end, gfp_t gfp_mask,
            pgprot_t prot, int node, const void *caller)
{
    struct vm_struct *area;
    void *addr;
    unsigned long real_size = size;

    size = PAGE_ALIGN(size);
    if (!size || (size >> PAGE_SHIFT) > totalram_pages)
        goto fail;

    // Find a free range in the vmalloc area of the kernel virtual address space.
    // VM_ALLOC: the area's pages are allocated by vmalloc.
    // VM_UNLIST: the vm_struct is not (yet) on the global vmlist.
    area = __get_vm_area_node(size, align, VM_ALLOC | VM_UNLIST,
                              start, end, node, gfp_mask, caller);
    if (!area)
        goto fail;

    // Allocate physical pages one at a time and map them into the vmalloc range:
    // build page tables for the non-contiguous pages and update the kernel page table.
    addr = __vmalloc_area_node(area, gfp_mask, prot, node, caller);
    if (!addr)
        return NULL;

    /*
     * In this function, newly allocated vm_struct has VM_UNLIST flag.
     * It means that vm_struct is not fully initialized.
     * Now, it is fully initialized, so remove this flag here.
     */
    clear_vm_unlist(area);

    /*
     * A ref_count = 3 is needed because the vm_struct and vmap_area
     * structures allocated in the __get_vm_area_node() function contain
     * references to the virtual address of the vmalloc'ed block.
     */
    kmemleak_alloc(addr, real_size, 3, gfp_mask);

    return addr;

fail:
    warn_alloc_failed(gfp_mask, 0,
                      "vmalloc: allocation failure: %lu bytes\n",
                      real_size);
    return NULL;
}
```

1.3 __vmalloc_node_range

1.3.1 __get_vm_area_node

```c
static struct vm_struct *__get_vm_area_node(unsigned long size,
        unsigned long align, unsigned long flags, unsigned long start,
        unsigned long end, int node, gfp_t gfp_mask, const void *caller)
{
    struct vmap_area *va;
    struct vm_struct *area;

    ......

    size = PAGE_ALIGN(size);
    if (unlikely(!size))
        return NULL;

    // Allocate the struct vm_struct from the slab allocator (kzalloc_node)
    area = kzalloc_node(sizeof(*area), gfp_mask & GFP_RECLAIM_MASK, node);
    if (unlikely(!area))
        return NULL;

    /*
     * We always allocate a guard page.
     */
    // Reserve one extra guard page as a safety gap after the area
    size += PAGE_SIZE;

    // Allocate a struct vmap_area via kmalloc, find a free range for it,
    // and add it to the red-black tree and the list
    va = alloc_vmap_area(size, align, start, end, node, gfp_mask);
    if (IS_ERR(va)) {
        kfree(area);
        return NULL;
    }

    /*
     * When this function is called from __vmalloc_node_range,
     * we add VM_UNLIST flag to avoid accessing uninitialized
     * members of vm_struct such as pages and nr_pages fields.
     * They will be set later.
     */
    // Link struct vmap_area *va and struct vm_struct *area to each other
    if (flags & VM_UNLIST)
        setup_vmalloc_vm(area, va, flags, caller);
    else
        insert_vmalloc_vm(area, va, flags, caller);

    return area;
}
```

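This function finds a suitable place in the vmalloc area [VMALLOC_START, VMALLOC_END] and creates a new virtual area there. Because of the guard page, the reserved range is one page larger than the page-aligned request.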
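The size bookkeeping shared by this function and __vmalloc_area_node can be sketched in user space as follows. The struct and helper names are hypothetical. The two formulas come from the code above (size += PAGE_SIZE) and from __vmalloc_area_node (nr_pages = (area->size - PAGE_SIZE) >> PAGE_SHIFT). The sketch assumes 4 KiB pages.

```c
#include <assert.h>

/* Assumption: 4 KiB pages. */
#define PAGE_SHIFT 12
#define PAGE_SIZE  (1UL << PAGE_SHIFT)
#define PAGE_ALIGN(x) (((x) + PAGE_SIZE - 1) & ~(PAGE_SIZE - 1))

/* Hypothetical mirror of two vm_struct fields after a vmalloc(size). */
struct area_sizes {
    unsigned long reserved;   /* virtual bytes reserved (vm_struct->size) */
    unsigned long nr_pages;   /* physical pages backing it (vm_struct->nr_pages) */
};

static struct area_sizes vm_area_sizes(unsigned long size)
{
    struct area_sizes s = {0, 0};

    size = PAGE_ALIGN(size);
    if (!size)
        return s;                   /* the kernel returns NULL here */
    s.reserved = size + PAGE_SIZE;  /* "We always allocate a guard page." */
    /* __vmalloc_area_node backs everything except the guard page */
    s.nr_pages = (s.reserved - PAGE_SIZE) >> PAGE_SHIFT;
    return s;
}
```

Under these assumptions, a 10000-byte request reserves 16 KiB of virtual address space but only 3 physical pages. The last page of the range stays unmapped as the guard page.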

The most important helper it calls is alloc_vmap_area:

```c
static DEFINE_SPINLOCK(vmap_area_lock);
/* Export for kexec only */
LIST_HEAD(vmap_area_list);
static struct rb_root vmap_area_root = RB_ROOT;

/* The vmap cache globals are protected by vmap_area_lock */
static struct rb_node *free_vmap_cache;

/*
 * Allocate a region of KVA of the specified size and alignment, within the
 * vstart and vend.
 */
static struct vmap_area *alloc_vmap_area(unsigned long size,
                unsigned long align,
                unsigned long vstart, unsigned long vend,
                int node, gfp_t gfp_mask)
{
    struct vmap_area *va;
    struct rb_node *n;
    unsigned long addr;
    int purged = 0;
    struct vmap_area *first;

    ......

    // Allocate a struct vmap_area via kmalloc
    va = kmalloc_node(sizeof(struct vmap_area),
                      gfp_mask & GFP_RECLAIM_MASK, node);

    ......

    spin_lock(&vmap_area_lock);

    /* find starting point for our search */
    if (free_vmap_cache) {
        first = rb_entry(free_vmap_cache, struct vmap_area, rb_node);
        addr = ALIGN(first->va_end, align);
        if (addr < vstart)
            goto nocache;
        if (addr + size - 1 < addr)
            goto overflow;
    } else {
        addr = ALIGN(vstart, align);
        if (addr + size - 1 < addr)
            goto overflow;

        n = vmap_area_root.rb_node;
        first = NULL;

        while (n) {
            struct vmap_area *tmp;
            tmp = rb_entry(n, struct vmap_area, rb_node);
            if (tmp->va_end >= addr) {
                first = tmp;
                if (tmp->va_start <= addr)
                    break;
                n = n->rb_left;
            } else
                n = n->rb_right;
        }

        if (!first)
            goto found;
    }

    /* from the starting point, walk areas until a suitable hole is found */
    // Search the vmalloc area for a free range large enough for the request
    while (addr + size > first->va_start && addr + size <= vend) {
        if (addr + cached_hole_size < first->va_start)
            cached_hole_size = first->va_start - addr;
        addr = ALIGN(first->va_end, align);
        if (addr + size - 1 < addr)
            goto overflow;

        if (list_is_last(&first->list, &vmap_area_list))
            goto found;

        first = list_entry(first->list.next,
                           struct vmap_area, list);
    }

    // A suitable range was found: record it and register it with __insert_vmap_area
found:
    if (addr + size > vend)
        goto overflow;

    va->va_start = addr;
    va->va_end = addr + size;
    va->flags = 0;
    __insert_vmap_area(va);
    free_vmap_cache = &va->rb_node;
    spin_unlock(&vmap_area_lock);

    return va;

    ......
}
```

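When there is no cached starting point, the search begins at vstart (VMALLOC_START for vmalloc). It descends the red-black tree vmap_area_root, which holds every vmalloc area currently in use, sorted by address. The descent looks for the lowest-addressed area whose end is at or above addr. If that area also starts at or below addr, it contains addr and the descent stops early. If the tree has no such node (for example, because it is empty), everything from addr upward is free and the code jumps straight to found.

From that area onward, the code walks the address-sorted list vmap_area_list and checks whether the hole in front of each existing area can hold the requested size. If none of the holes between existing areas is large enough, the new area starts right after the end of the last area, provided it still fits below vend.

Once a suitable hole is found, va_start and va_end are filled in and __insert_vmap_area registers the new vmap_area in the red-black tree. free_vmap_cache is then pointed at the new area so that the next search can start from there.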
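The search can be illustrated with a simplified first-fit model. This is a sketch under explicit assumptions, not the kernel algorithm verbatim. Busy areas are kept in a plain sorted array instead of vmap_area_root and vmap_area_list, and free_vmap_cache, cached_hole_size, purging, and locking are all left out. The names struct range, align_up, and find_hole are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* A busy area: half-open range [start, end), like va_start/va_end. */
struct range {
    unsigned long start;
    unsigned long end;
};

/* Round x up to a multiple of align (align must be a power of two). */
static unsigned long align_up(unsigned long x, unsigned long align)
{
    return (x + align - 1) & ~(align - 1);
}

/* Simplified model of alloc_vmap_area()'s search. busy[] must be sorted
 * by address and non-overlapping. Returns the chosen start address,
 * or 0 if the request does not fit below vend (the "overflow" path). */
static unsigned long find_hole(const struct range *busy, size_t n,
                               unsigned long size, unsigned long align,
                               unsigned long vstart, unsigned long vend)
{
    unsigned long addr = align_up(vstart, align);
    size_t i = 0;

    /* Skip areas that end below addr (the rb-tree descent's job). */
    while (i < n && busy[i].end < addr)
        i++;

    /* While the request collides with busy[i], jump past that area. */
    while (i < n && addr + size > busy[i].start) {
        addr = align_up(busy[i].end, align);
        i++;
    }

    if (addr + size < addr || addr + size > vend)
        return 0;
    return addr;
}
```

Take busy areas at [0x1000, 0x3000), [0x4000, 0x5000) and [0x8000, 0x9000). A one-page request lands in the first hole, at 0x3000. A four-page request skips both small holes and is placed after the last area, at 0x9000. A request that would run past vend fails.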

__insert_vmap_area adds struct vmap_area *va to the global red-black tree vmap_area_root and to the global list vmap_area_list:

```c
/* Export for kexec only */
LIST_HEAD(vmap_area_list);
static struct rb_root vmap_area_root = RB_ROOT;

static void __insert_vmap_area(struct vmap_area *va)
{
    struct rb_node **p = &vmap_area_root.rb_node;
    struct rb_node *parent = NULL;
    struct rb_node *tmp;

    while (*p) {
        struct vmap_area *tmp_va;

        parent = *p;
        tmp_va = rb_entry(parent, struct vmap_area, rb_node);
        if (va->va_start < tmp_va->va_end)
            p = &(*p)->rb_left;
        else if (va->va_end > tmp_va->va_start)
            p = &(*p)->rb_right;
        else
            BUG();
    }

    rb_link_node(&va->rb_node, parent, p);
    // Insert struct vmap_area *va into the global red-black tree vmap_area_root
    rb_insert_color(&va->rb_node, &vmap_area_root);

    /* address-sort this list */
    tmp = rb_prev(&va->rb_node);
    if (tmp) {
        struct vmap_area *prev;
        prev = rb_entry(tmp, struct vmap_area, rb_node);
        list_add_rcu(&va->list, &prev->list);
    } else
        // No predecessor: add va at the head of the global list vmap_area_list
        list_add_rcu(&va->list, &vmap_area_list);
}
```
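The invariant that __insert_vmap_area maintains (areas in address order, never overlapping) can be modeled with a sorted array. This is an illustration only. The kernel keeps the areas in a red-black tree plus a list and calls BUG() on overlap, whereas the hypothetical insert_range below simply returns false.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Half-open range [start, end), like va_start/va_end. */
struct range {
    unsigned long start;
    unsigned long end;
};

/* Insert r into v[0..*n), keeping v sorted by address.
 * Returns false if v is full, r is empty, or r overlaps an existing range. */
static bool insert_range(struct range *v, size_t *n, size_t cap, struct range r)
{
    size_t pos = 0;

    if (*n >= cap || r.start >= r.end)
        return false;
    /* First range that ends after r starts (like descending the tree). */
    while (pos < *n && v[pos].end <= r.start)
        pos++;
    /* If it also starts before r ends, the two ranges overlap. */
    if (pos < *n && v[pos].start < r.end)
        return false;
    /* Shift the tail right and insert after the predecessor
     * (the list_add_rcu() after rb_prev() in the kernel). */
    for (size_t i = *n; i > pos; i--)
        v[i] = v[i - 1];
    v[pos] = r;
    (*n)++;
    return true;
}
```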

1.3.2 __vmalloc_area_node

```c
static void *__vmalloc_area_node(struct vm_struct *area, gfp_t gfp_mask,
                                 pgprot_t prot, int node, const void *caller)
{
    const int order = 0;
    struct page **pages;
    unsigned int nr_pages, array_size, i;
    gfp_t nested_gfp = (gfp_mask & GFP_RECLAIM_MASK) | __GFP_ZERO;

    // Number of physical pages needed (the guard page is not backed)
    nr_pages = (area->size - PAGE_SIZE) >> PAGE_SHIFT;
    array_size = (nr_pages * sizeof(struct page *));

    area->nr_pages = nr_pages;
    /* Please note that the recursion is strictly bounded. */
    if (array_size > PAGE_SIZE) {
        pages = __vmalloc_node(array_size, 1, nested_gfp|__GFP_HIGHMEM,
                               PAGE_KERNEL, node, caller);
        area->flags |= VM_VPAGES;
    } else {
        pages = kmalloc_node(array_size, nested_gfp, node);
    }
    area->pages = pages;
    area->caller = caller;
    if (!area->pages) {
        remove_vm_area(area->addr);
        kfree(area);
        return NULL;
    }

    // Allocate physical pages from the buddy allocator one page at a time;
    // the pages do not have to be physically contiguous
    for (i = 0; i < area->nr_pages; i++) {
        struct page *page;
        gfp_t tmp_mask = gfp_mask | __GFP_NOWARN;

        // vmalloc() passes node = NUMA_NO_NODE (-1)
        if (node < 0)
            // alloc_page(): buddy allocator interface, allocates a single page
            page = alloc_page(tmp_mask);
        else
            page = alloc_pages_node(node, tmp_mask, order);

        if (unlikely(!page)) {
            /* Successfully allocated i pages, free them in __vunmap() */
            area->nr_pages = i;
            goto fail;
        }
        area->pages[i] = page;
    }

    // Map the pages, in order, into the contiguous vmalloc virtual range
    if (map_vm_area(area, prot, &pages))
        goto fail;
    return area->addr;

fail:
    warn_alloc_failed(gfp_mask, order,
                      "vmalloc: allocation failure, allocated %ld of %ld bytes\n",
                      (area->nr_pages*PAGE_SIZE), area->size);
    vfree(area->addr);
    return NULL;
}
```

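```c
#define alloc_page(gfp_mask) alloc_pages(gfp_mask, 0)
```

order = 0 means a single page (2^0 pages) is requested from the buddy allocator.

The function calls alloc_page to get physical pages from the buddy system on the current node. The goal of vmalloc is to provide large blocks of memory. Because of fragmentation, however, the free page frames may not be contiguous. To make large allocations possible anyway, vmalloc uses the smallest allocation unit, a single page, and allocates page by page. This way a large vmalloc allocation can still succeed even when physical memory is heavily fragmented.

Each call to the buddy allocator interface alloc_page() returns one page frame. That frame is stored in the struct page * array of the vm_struct.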
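Two details of the function above can be checked with a small user-space model, assuming 4 KiB pages. The first is when the struct page * array itself is big enough to need vmalloc (array_size > PAGE_SIZE). The second is how a failure in the page-by-page loop records only the pages actually obtained. fake_buddy and fake_alloc_page are hypothetical stand-ins for the buddy allocator and alloc_page().

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define PAGE_SIZE 4096UL   /* assumption: 4 KiB pages */

/* Mirrors the array_size > PAGE_SIZE test in __vmalloc_area_node().
 * ptr_size stands for sizeof(struct page *). */
static bool pages_array_needs_vmalloc(unsigned int nr_pages, size_t ptr_size)
{
    return (unsigned long)nr_pages * ptr_size > PAGE_SIZE;
}

/* Hypothetical page source: hands out fake frame numbers and fails
 * once `budget` pages have been handed out. */
struct fake_buddy {
    unsigned int budget;
    unsigned long next_pfn;
};

static long fake_alloc_page(struct fake_buddy *b)
{
    if (b->budget == 0)
        return -1;
    b->budget--;
    return (long)b->next_pfn++;
}

/* Allocate nr_pages frames one at a time into pages[]. As in the kernel
 * loop (area->nr_pages = i), a failure reports how many pages were
 * obtained so that exactly those can be freed. */
static unsigned int fill_pages(struct fake_buddy *b, long *pages,
                               unsigned int nr_pages)
{
    for (unsigned int i = 0; i < nr_pages; i++) {
        long pfn = fake_alloc_page(b);

        if (pfn < 0)
            return i;
        pages[i] = pfn;
    }
    return nr_pages;
}
```

With 8-byte pointers, one page holds 512 entries. Any vmalloc larger than 512 pages (2 MiB) on a 64-bit kernel therefore gets its pages array from a nested __vmalloc_node call and sets VM_VPAGES.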

map_vm_area then maps the allocated pages, in order, into the contiguous vmalloc virtual range.

1.3.3 map_vm_area

```c
int map_vm_area(struct vm_struct *area, pgprot_t prot, struct page ***pages)
{
    unsigned long addr = (unsigned long)area->addr;
    unsigned long end = addr + area->size - PAGE_SIZE;
    int err;

    err = vmap_page_range(addr, end, prot, *pages);
    if (err > 0) {
        *pages += err;
        err = 0;
    }

    return err;
}
EXPORT_SYMBOL_GPL(map_vm_area);

static int vmap_page_range(unsigned long start, unsigned long end,
                           pgprot_t prot, struct page **pages)
{
    int ret;

    ret = vmap_page_range_noflush(start, end, prot, pages);
    flush_cache_vmap(start, end);
    return ret;
}

/*
 * Set up page tables in kva (addr, end). The ptes shall have prot "prot", and
 * will have pfns corresponding to the "pages" array.
 *
 * Ie. pte at addr+N*PAGE_SIZE shall point to pfn corresponding to pages[N]
 */
static int vmap_page_range_noflush(unsigned long start, unsigned long end,
                                   pgprot_t prot, struct page **pages)
{
    pgd_t *pgd;
    unsigned long next;
    unsigned long addr = start;
    int err = 0;
    int nr = 0;

    BUG_ON(addr >= end);
    // Entry in the kernel page table init_mm.pgd (= swapper_pg_dir)
    pgd = pgd_offset_k(addr);
    do {
        next = pgd_addr_end(addr, end);
        // Fill the PUD level under this PGD entry (allocating the PUD table if needed)
        err = vmap_pud_range(pgd, addr, next, prot, pages, &nr);
        if (err)
            return err;
    } while (pgd++, addr = next, addr != end);

    return nr;
}
```

```c
// linux-3.10/arch/x86/include/asm/pgtable.h
/*
 * a shortcut which implies the use of the kernel's pgd, instead
 * of a process's
 */
#define pgd_offset_k(address) pgd_offset(&init_mm, (address))
```

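pgd_offset_k returns the entry in the kernel page table init_mm.pgd (swapper_pg_dir). Starting from that entry, the code maps the physical pages into the kernel virtual range, allocating PUD, PMD, and PTE tables as needed and updating the kernel page table. The updated kernel page table is not copied into user processes' page tables at this point; this is the lazy mechanism. Only when a process accesses the address and takes the corresponding page fault is the entry synchronized from the kernel page table into that process's page table.

For how vmalloc page faults are handled, see the article "Linux vmalloc page fault handling".

pud_alloc, pmd_alloc, and pte_alloc_kernel allocate the PUD, PMD, and PTE tables.

The functions below build the kernel page-table mappings for the non-contiguous physical pages allocated earlier. Starting from the kernel PGD entry, they loop level by level and fill in the kernel page tables for those pages.

The loops terminate once a page-table entry has been set up for every page of the non-contiguous area.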
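To see which entries this walk touches, an address can be split into its page-table indices. The sketch below assumes x86_64 4-level paging with 4 KiB pages: 9 index bits per level, with shifts of 39, 30, 21, and 12. The struct and function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Assumption: x86_64 4-level paging, 4 KiB pages, 512 entries per table. */
#define PAGE_SHIFT     12
#define PMD_SHIFT      21
#define PUD_SHIFT      30
#define PGDIR_SHIFT    39
#define PTRS_PER_TABLE 512

struct pt_index {
    unsigned int pgd, pud, pmd, pte;
};

/* Index used at each level of the walk for a given virtual address. */
static struct pt_index pt_indices(uint64_t addr)
{
    struct pt_index ix = {
        .pgd = (addr >> PGDIR_SHIFT) & (PTRS_PER_TABLE - 1),
        .pud = (addr >> PUD_SHIFT)   & (PTRS_PER_TABLE - 1),
        .pmd = (addr >> PMD_SHIFT)   & (PTRS_PER_TABLE - 1),
        .pte = (addr >> PAGE_SHIFT)  & (PTRS_PER_TABLE - 1),
    };
    return ix;
}
```

For VMALLOC_START (0xffffc90000000000), this gives PGD index 402 with all lower indices 0. An address 2 MiB + 4 KiB higher stays in the same PGD and PUD entries but moves to PMD entry 1 and PTE 1.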

```c
static int vmap_pte_range(pmd_t *pmd, unsigned long addr,
        unsigned long end, pgprot_t prot, struct page **pages, int *nr)
{
    pte_t *pte;

    /*
     * nr is a running index into the array which helps higher level
     * callers keep track of where we're up to.
     */

    // Get the PTE for addr, allocating a PTE table if the PMD entry is empty
    pte = pte_alloc_kernel(pmd, addr);
    if (!pte)
        return -ENOMEM;
    // Loop until PTEs have been set up for every page in [addr, end)
    do {
        // pages[*nr]: next page from the array allocated earlier
        struct page *page = pages[*nr];

        if (WARN_ON(!pte_none(*pte)))
            return -EBUSY;
        if (WARN_ON(!page))
            return -ENOMEM;
        // Fill the kernel PTE: write the page's physical frame and prot into it
        set_pte_at(&init_mm, addr, pte, mk_pte(page, prot));
        (*nr)++;
    } while (pte++, addr += PAGE_SIZE, addr != end);
    return 0;
}

static int vmap_pmd_range(pud_t *pud, unsigned long addr,
        unsigned long end, pgprot_t prot, struct page **pages, int *nr)
{
    pmd_t *pmd;
    unsigned long next;

    // Get the PMD table for addr, allocating it if the PUD entry is empty
    pmd = pmd_alloc(&init_mm, pud, addr);
    if (!pmd)
        return -ENOMEM;
    // Loop over the PMD entries covering [addr, end)
    do {
        next = pmd_addr_end(addr, end);
        // Fill the PTEs under this PMD entry
        if (vmap_pte_range(pmd, addr, next, prot, pages, nr))
            return -ENOMEM;
    } while (pmd++, addr = next, addr != end);
    return 0;
}

static int vmap_pud_range(pgd_t *pgd, unsigned long addr,
        unsigned long end, pgprot_t prot, struct page **pages, int *nr)
{
    pud_t *pud;
    unsigned long next;

    // Get the PUD table for addr, allocating it if the PGD entry is empty
    pud = pud_alloc(&init_mm, pgd, addr);
    if (!pud)
        return -ENOMEM;
    // Loop over the PUD entries covering [addr, end)
    do {
        next = pud_addr_end(addr, end);
        // Fill the PMD level under this PUD entry
        if (vmap_pmd_range(pud, addr, next, prot, pages, nr))
            return -ENOMEM;
    } while (pud++, addr = next, addr != end);
    return 0;
}
```

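map_vm_area receives the array of allocated physical pages, struct page **pages, and passes it all the way down to vmap_pte_range. There the PTE table is allocated, and set_pte_at(&init_mm, addr, pte, mk_pte(page, prot)) fills in each page-table entry by writing the physical address of the page frame into it. This ties pte_t *pte to struct page *page: each newly written page-table entry now points to its physical page.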
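Two patterns recur in these functions. One is the range splitting: next = *_addr_end(addr, end), then work on [addr, next). The other is the running index *nr. Both can be shown with a two-level user-space model. The model assumes 4 KiB pages and 2 MiB of coverage per PMD entry, as on x86_64. pmd_addr_end is re-implemented with the same clamping formula as the kernel's generic version. map_range and the flat pte_pfn array are hypothetical stand-ins for the page tables.

```c
#include <assert.h>
#include <stdint.h>

/* Assumption: 4 KiB pages, each PMD entry covers 2 MiB (x86_64). */
#define PAGE_SHIFT 12
#define PAGE_SIZE  (1ULL << PAGE_SHIFT)
#define PMD_SIZE   (1ULL << 21)
#define PMD_MASK   (~(PMD_SIZE - 1))

/* End of the current PMD entry's coverage, clamped to end. The "- 1"
 * on both sides keeps the comparison correct if end is the very top
 * of the address space. */
static uint64_t pmd_addr_end(uint64_t addr, uint64_t end)
{
    uint64_t boundary = (addr + PMD_SIZE) & PMD_MASK;
    return (boundary - 1 < end - 1) ? boundary : end;
}

/* Two-level model of vmap_pmd_range()/vmap_pte_range().
 * pte_pfn[k] stands for the PTE of page k of the range, and pages[]
 * holds the frame numbers to map. Returns the number of PMD-sized
 * chunks (PTE tables) visited; *nr ends as the number of pages mapped. */
static unsigned int map_range(uint64_t start, uint64_t end,
                              const long *pages, long *pte_pfn, int *nr)
{
    uint64_t addr = start, next;
    unsigned int chunks = 0;

    do {
        next = pmd_addr_end(addr, end);
        chunks++;                 /* one PTE table per PMD entry */
        do {
            /* the pte at start + N * PAGE_SIZE gets pages[N] */
            pte_pfn[(addr - start) >> PAGE_SHIFT] = pages[*nr];
            (*nr)++;
        } while (addr += PAGE_SIZE, addr != next);
    } while (addr = next, addr != end);

    return chunks;
}
```

Consider a three-page range that starts one page below a 2 MiB boundary. It spans two PTE tables, yet the running index still hands out pages[0], pages[1], and pages[2] in address order. This matches the rule "pte at addr+N*PAGE_SIZE shall point to pfn corresponding to pages[N]" from the comment on vmap_page_range_noflush.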


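The inverse set of operations is used by vunmap to release an area. It removes entries that are no longer needed from the kernel page table, and, as with allocation, it has to walk every level of the page table: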

```c
/*** Page table manipulation functions ***/

static void vunmap_pte_range(pmd_t *pmd, unsigned long addr, unsigned long end)
{
    pte_t *pte;

    pte = pte_offset_kernel(pmd, addr);
    do {
        pte_t ptent = ptep_get_and_clear(&init_mm, addr, pte);
        WARN_ON(!pte_none(ptent) && !pte_present(ptent));
    } while (pte++, addr += PAGE_SIZE, addr != end);
}

static void vunmap_pmd_range(pud_t *pud, unsigned long addr, unsigned long end)
{
    pmd_t *pmd;
    unsigned long next;

    pmd = pmd_offset(pud, addr);
    do {
        next = pmd_addr_end(addr, end);
        if (pmd_none_or_clear_bad(pmd))
            continue;
        vunmap_pte_range(pmd, addr, next);
    } while (pmd++, addr = next, addr != end);
}

static void vunmap_pud_range(pgd_t *pgd, unsigned long addr, unsigned long end)
{
    pud_t *pud;
    unsigned long next;

    pud = pud_offset(pgd, addr);
    do {
        next = pud_addr_end(addr, end);
        if (pud_none_or_clear_bad(pud))
            continue;
        vunmap_pmd_range(pud, addr, next);
    } while (pud++, addr = next, addr != end);
}

static void vunmap_page_range(unsigned long addr, unsigned long end)
{
    pgd_t *pgd;
    unsigned long next;

    BUG_ON(addr >= end);
    pgd = pgd_offset_k(addr);
    do {
        next = pgd_addr_end(addr, end);
        if (pgd_none_or_clear_bad(pgd))
            continue;
        vunmap_pud_range(pgd, addr, next);
    } while (pgd++, addr = next, addr != end);
}
```

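2. Data structures

The two most important data structures in the vmalloc implementation are struct vm_struct and struct vmap_area.

Both structures are allocated with kmalloc, that is, from the general-purpose slab/slub allocator.

2.1 struct vm_struct

Every contiguous virtual address range that the kernel allocates with vmalloc is managed by one struct vm_struct.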

```c
// linux-3.10/include/linux/vmalloc.h
struct vm_struct {
    struct vm_struct    *next;
    void                *addr;
    unsigned long       size;
    unsigned long       flags;
    struct page         **pages;
    unsigned int        nr_pages;
    phys_addr_t         phys_addr;
    const void          *caller;
};
```

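| Member | Description |
|---|---|
| next | Lets the kernel keep all sub-areas of the vmalloc area on a singly linked list |
| addr | Start address of the sub-area in the virtual address space. Together with size, this describes the complete allocation layout of the vmalloc area |
| size | Size of the virtual address range, i.e. the length of the sub-area |
| flags | Set of flags associated with the area; used only to specify the area type |
| pages | Pointer to an array of page pointers. Each element is the struct page of one physical page mapped into the virtual range |
| nr_pages | Number of entries in pages, i.e. the number of physical pages involved |
| phys_addr | Needed only when ioremap maps a physical memory region described by a physical address; that address is stored here |
| caller | Return address of the allocation's caller (the caller argument passed down the call chain) |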

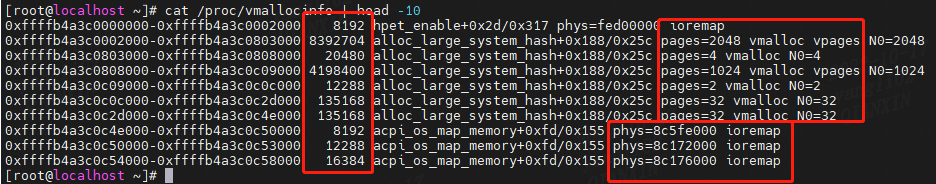

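/proc/vmallocinfo shows that every vmalloc sub-area is a whole number of pages in size. When ioremap maps a physical memory region described by a physical address, that address is stored in the phys_addr member.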
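The guard page is also visible in /proc/vmallocinfo. The printed size is vm_struct->size, which includes the guard page, while pages= counts only the mapped pages. The parser below assumes the line format produced by s_show() in linux-3.10 mm/vmalloc.c, namely "0x<start>-0x<end> <size> [caller] [pages=N] ... [vmalloc]". Treat that format as an assumption to verify against your kernel. The struct and function names are hypothetical, and the sample line in the usage note is made up.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

struct vmi_entry {
    unsigned long long start, end, size;
    int nr_pages;     /* -1 if the line has no pages= field */
    int is_vmalloc;   /* 1 if the standalone word "vmalloc" appears */
};

/* True if `word` occurs in `line` as a whole space-separated token. */
static int has_flag_word(const char *line, const char *word)
{
    size_t len = strlen(word);

    for (const char *p = strstr(line, word); p; p = strstr(p + 1, word)) {
        int starts = (p == line || p[-1] == ' ');
        char after = p[len];
        int ends = (after == '\0' || after == ' ' || after == '\n');
        if (starts && ends)
            return 1;
    }
    return 0;
}

/* Parse one /proc/vmallocinfo line (assumed format, see above).
 * Returns 1 on success, 0 if the address/size prefix is missing. */
static int parse_vmallocinfo_line(const char *line, struct vmi_entry *e)
{
    const char *p;

    if (sscanf(line, "0x%llx-0x%llx %llu", &e->start, &e->end, &e->size) != 3)
        return 0;
    e->nr_pages = -1;
    p = strstr(line, " pages=");
    if (p)
        sscanf(p, " pages=%d", &e->nr_pages);
    e->is_vmalloc = has_flag_word(line, "vmalloc");
    return 1;
}
```

Consider a line such as `0xffffc90000004000-0xffffc90000008000   16384 some_caller+0x10/0x20 pages=3 vmalloc`. The parser yields end - start == size == 16384 and pages=3: 3 mapped pages plus one guard page.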

The flags field can take the following values:

```c
// linux-3.10/include/linux/vmalloc.h
/* bits in flags of vmalloc's vm_struct below */
#define VM_IOREMAP   0x00000001  /* ioremap() and friends */
#define VM_ALLOC     0x00000002  /* vmalloc() */
#define VM_MAP       0x00000004  /* vmap()ed pages */
#define VM_USERMAP   0x00000008  /* suitable for remap_vmalloc_range */
#define VM_VPAGES    0x00000010  /* buffer for pages was vmalloc'ed */
#define VM_UNLIST    0x00000020  /* vm_struct is not listed in vmlist */
/* bits [20..32] reserved for arch specific ioremap internals */
```

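For areas allocated with vmalloc, the flag is normally VM_ALLOC.

2.2 struct vmap_area

A suitable range of virtual address space has to be found before a new virtual memory area can be created. struct vmap_area describes such a range, i.e. a vmalloc sub-area.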

```c
struct vmap_area {
    unsigned long va_start;
    unsigned long va_end;
    unsigned long flags;
    struct rb_node rb_node;         /* address sorted rbtree */
    struct list_head list;          /* address sorted list */
    struct list_head purge_list;    /* "lazy purge" list */
    struct vm_struct *vm;
    struct rcu_head rcu_head;
};
```

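| Member | Description |
|---|---|
| va_start | Start address of the vmalloc sub-area's virtual range |
| va_end | End address (exclusive) of the vmalloc sub-area's virtual range |
| flags | Type flags |
| rb_node | Node used to insert the area into the red-black tree vmap_area_root |
| list | Node used to add the area to the list vmap_area_list |
| purge_list | Node used to add the area to the global list vmap_purge_list |
| vm | Points to the corresponding vm_struct |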

flags holds the type; for vmalloc areas it is normally VM_VM_AREA:

```c
/*** Global kva allocator ***/
#define VM_LAZY_FREE     0x01
#define VM_LAZY_FREEING  0x02
#define VM_VM_AREA       0x04
```

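struct vmap_area describes a range of virtual addresses, as va_start and va_end make clear. Each instance is linked into the red-black tree through rb_node and into the list through list.

The vm field of struct vmap_area points to the corresponding struct vm_struct, which manages the mapping between the virtual addresses and the physical pages. vm_struct instances can in turn be chained into a list through next, so multiple mappings can be tracked.

3. vmalloc initialization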

```c
asmlinkage void __init start_kernel(void)
{
    ......
    mm_init();
    ......
}

/*
 * Set up kernel memory allocators
 */
static void __init mm_init(void)
{
    /*
     * page_cgroup requires contiguous pages,
     * bigger than MAX_ORDER unless SPARSEMEM.
     */
    page_cgroup_init_flatmem();
    mem_init();
    kmem_cache_init();
    percpu_init_late();
    pgtable_cache_init();
    vmalloc_init();
}
```

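vmalloc_init is called only after the buddy system and the slab/slub allocator have been initialized. The order matters because vmalloc relies on kmalloc, mainly to allocate struct vm_struct and struct vmap_area.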

```c
static struct vm_struct *vmlist __initdata;

void __init vmalloc_init(void)
{
    struct vmap_area *va;
    struct vm_struct *tmp;
    int i;

    for_each_possible_cpu(i) {
        struct vmap_block_queue *vbq;
        struct vfree_deferred *p;

        vbq = &per_cpu(vmap_block_queue, i);
        spin_lock_init(&vbq->lock);
        INIT_LIST_HEAD(&vbq->free);
        p = &per_cpu(vfree_deferred, i);
        init_llist_head(&p->list);
        INIT_WORK(&p->wq, free_work);
    }

    /* Import existing vmlist entries. */
    for (tmp = vmlist; tmp; tmp = tmp->next) {
        // Allocate a struct vmap_area for each vm_struct that already exists
        va = kzalloc(sizeof(struct vmap_area), GFP_NOWAIT);
        // Initialize the struct vmap_area
        va->flags = VM_VM_AREA;
        va->va_start = (unsigned long)tmp->addr;
        va->va_end = va->va_start + tmp->size;
        va->vm = tmp;
        __insert_vmap_area(va);
    }

    vmap_area_pcpu_hole = VMALLOC_END;

    vmap_initialized = true;
}
```

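vmlist is a global linked list of struct vm_struct holding the areas registered before vmalloc_init runs. The code walks vmlist, creates and initializes a struct vmap_area for each struct vm_struct, and adds it to the global red-black tree vmap_area_root and the global list vmap_area_list. Every virtual address range allocated through interfaces such as vmap and vmalloc is tracked in vmap_area_root and vmap_area_list.

Summary

(1) Find a suitable place in the vmalloc area [VMALLOC_START, VMALLOC_END] and create a new virtual area there.

(2) Allocate physical pages one at a time through the buddy allocator interface alloc_page().

(3) Map the vmalloc virtual range to those physical pages: allocate the PUD, PMD, and PTE tables of the kernel page table as needed and update the kernel page table.

(4) The physical pages are allocated one page at a time, so the mapping must also be built one 4 KiB page at a time. Compared with the kernel's direct mapping, which can use large pages, this needs many more TLB entries and causes far more TLB misses (TLB thrashing). Caches are used less effectively as a result, and page-table lookups become less efficient.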
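The cost difference in point (4) is easy to quantify. The helper below has a hypothetical name. It counts how many separate translations are needed to map a buffer of a given size, assuming the buffer is aligned to the mapping size.

```c
#include <assert.h>

/* Number of separate translations (page-table entries, and potentially
 * TLB entries) needed to map `bytes` with pages of `page_size` bytes.
 * Assumes the buffer is aligned to page_size. */
static unsigned long mappings_needed(unsigned long long bytes,
                                     unsigned long long page_size)
{
    return (unsigned long)((bytes + page_size - 1) / page_size);
}
```

A 2 MiB vmalloc buffer needs 512 separate 4 KiB translations, and each of them may occupy its own TLB entry. The same amount of memory mapped by a single 2 MiB page in the direct mapping needs only one.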

References

Linux 3.10.0

https://www.cnblogs.com/LoyenWang/p/11965787.html

https://blog.csdn.net/u012489236/article/details/108313150

https://blog.csdn.net/weixin_42262944/article/details/119272588

https://kernel.blog.csdn.net/article/details/52705111